Migrating to the Melbourne Research Cloud

Introduction

As discussed at Getting Access for an existing Nectar Project, there are situations when you may want to migrate resources such as virtual machines from a Nectar Node to the Melbourne Research Cloud.

There is similar (and more comprehensive) information about how to do this in Nectar's own documentation:

The current document will provide a brief summary and focus on the case of migrating to MRC.

Simplest Scenario: No Ephemeral Storage, Default Networking, No Databases, No Volume Storage

1. Collect Information

When you create a new instance, even though it will be based on a snapshot of the old instance, it won't automatically inherit all the old instance's settings.

In particular, you will need to choose the Security Groups and Flavor from scratch.

You should also make sure you know which SSH keys were installed into the original instance. If you are happy with the existing keys, there is no need to add them again to the new instance. However, you will have the option of adding new ones if you wish.

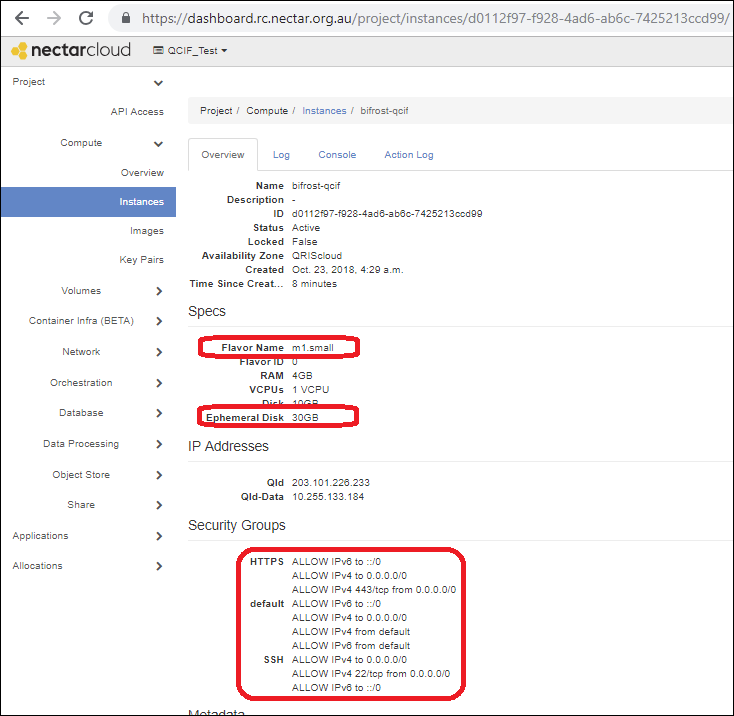

You can get most of the information you need from the detailed information page for the instance.

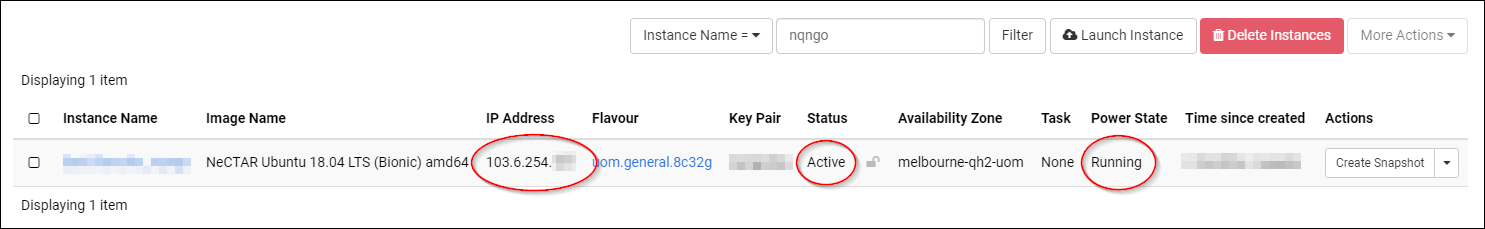

Select the required instance at https://dashboard.rc.nectar.org.au/project/instances/, or navigate the dashboard menu using Project → Compute → Instances. Once you are on the Instances page, click on the instance you intend to migrate. Here is a screenshot of the detailed information for an instance:

You can see that the Instance Overview includes useful information (highlighted) needed for the migration, such as the Flavor and Security Groups.

This screenshot also shows a potential complication to consider: this instance is running on a Flavor that offers ephemeral storage.

If you are not using the ephemeral storage then you have nothing to worry about. If you are using your ephemeral storage then you need to back it up separately, as ephemeral data is not captured at the snapshotting step. More about storage and backing up ephemeral data will be covered in Section 3.

For this scenario, it is important to confirm that you are not using any ephemeral storage before you proceed.

2. Shut off the instance

Although live snapshotting is possible, we advise you to shut off the instance before you snapshot. This will help ensure your migration goes as smoothly and quickly as possible.

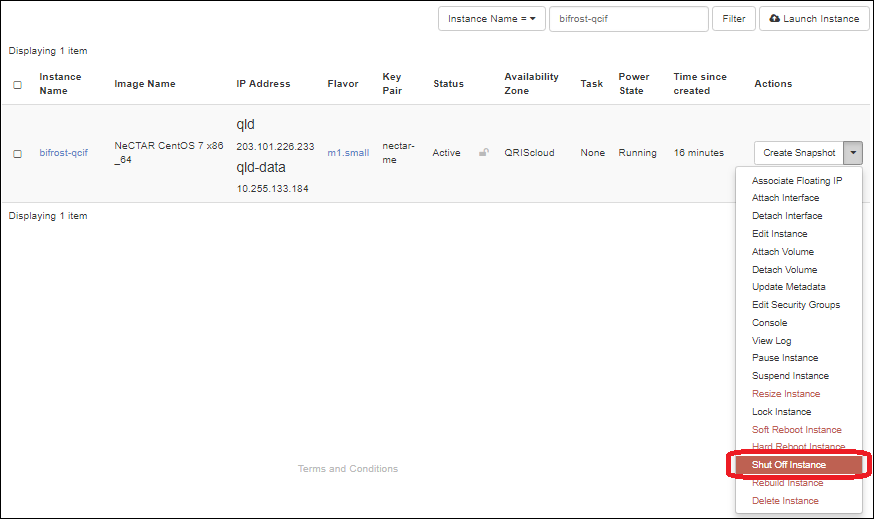

- Go to the Instances page: Project → Compute → Instances.

- Find the row associated with the instance that you are migrating.

- Open the Actions menu from the button at the right-hand end of the row.

- Click Shut Off Instance.

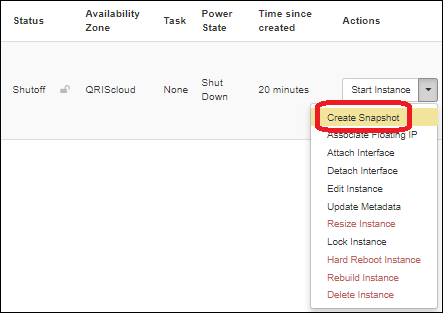

You need to wait for the instance to shut off. The Task column will say Powering Off. Eventually you will end up with a Status of Shutoff and a Power State of Shut Down:

3. Create a Snapshot of the instance

Once the instance is shut off, select Create Snapshot from the Actions menu:

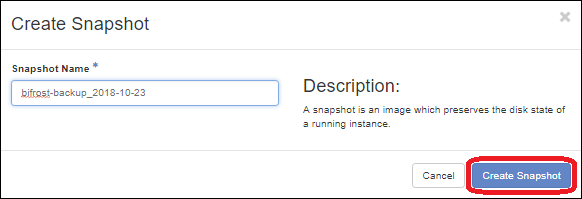

This will open the Create Snapshot dialogue:

Start the snapshotting process by entering a descriptive name and clicking the Create Snapshot button.

This is the most time-consuming part of the migration procedure. It takes longer for instances with large disks, but even a small instance requires some patience.
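If you prefer the command line to the dashboard, Steps 2 and 3 can be sketched as follows. This is a minimal sketch, not a supported procedure: it assumes the OpenStack command-line client is installed and your Nectar project credentials are loaded, and the instance and snapshot names are placeholders. The helper only builds and prints the commands so you can review them before running anything.

```python
import shlex


def snapshot_commands(server: str, snapshot_name: str) -> list[list[str]]:
    """Return the CLI commands for shutting off and snapshotting an instance."""
    return [
        # Step 2: shut off the instance before snapshotting.
        ["openstack", "server", "stop", server],
        # Check the status; repeat this command until it prints SHUTOFF.
        ["openstack", "server", "show", "-f", "value", "-c", "status", server],
        # Step 3: create the snapshot; --wait blocks until the image is ready.
        ["openstack", "server", "image", "create",
         "--name", snapshot_name, "--wait", server],
    ]


# Placeholder names: substitute your own instance and snapshot names.
for command in snapshot_commands("my-instance", "my-instance-pre-mrc"):
    print(shlex.join(command))
```

Run the printed commands one at a time, and only create the snapshot once the status check reports that the instance is shut off.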

4. Launch a new Instance from the Snapshot on MRC

MRC shares the same snapshot database as the Nectar cloud, so your snapshot is available on MRC immediately.

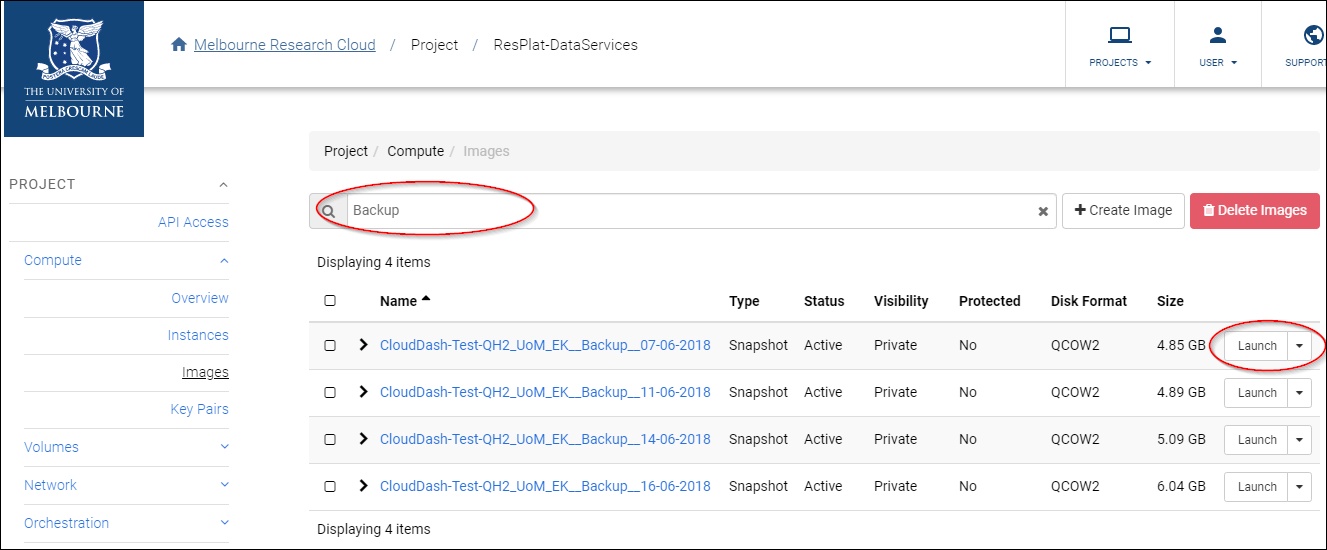

- Log in to the MRC dashboard and go to the Images page: Project → Compute → Images.

- Type the snapshot name in the filter area above the snapshot list.

- When you see the snapshot you just made, click the Launch button for that snapshot.

Clicking Launch will bring up the dialogue for launching instances.

In the Details tab, enter an Instance Name.

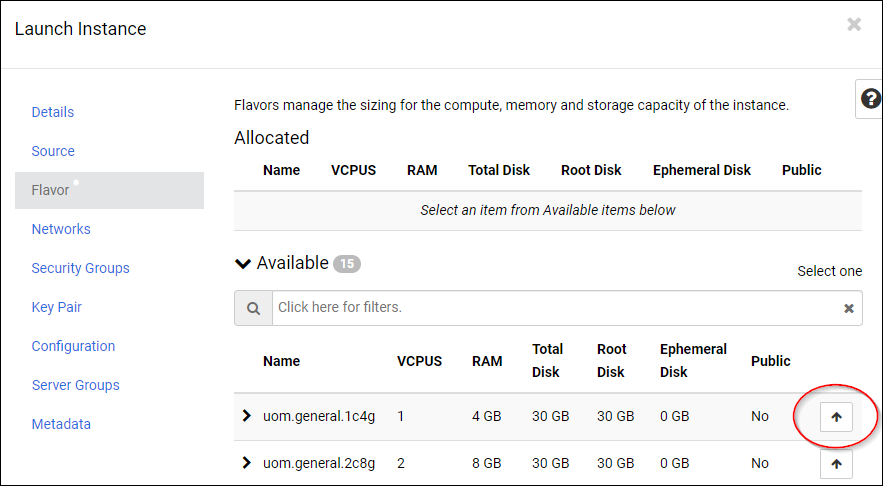

In the Flavors tab, select a UoM flavor with sufficient capacity for your instance.

On MRC, our flavors are aligned to CPU/RAM ratios that are optimised for our physical hypervisors.

Check the vCPU and RAM amounts of your previous instance and select an equivalent uom.general flavor.
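The "equivalent flavor" choice can be thought of as picking the smallest flavor that still covers the old instance's vCPUs and RAM. The sketch below illustrates that rule only. The flavor names and sizes in the catalogue are hypothetical, so check the Flavors tab for the real list.

```python
from typing import NamedTuple, Optional


class Flavor(NamedTuple):
    name: str
    vcpus: int
    ram_gb: int


def pick_flavor(flavors: list[Flavor], vcpus: int, ram_gb: int) -> Optional[Flavor]:
    """Return the smallest flavor with at least the requested vCPUs and RAM."""
    candidates = [f for f in flavors if f.vcpus >= vcpus and f.ram_gb >= ram_gb]
    # Among flavors that fit, prefer the fewest vCPUs, then the least RAM.
    return min(candidates, key=lambda f: (f.vcpus, f.ram_gb), default=None)


# Hypothetical catalogue: these names and sizes are for illustration only.
catalogue = [
    Flavor("example.1c4g", 1, 4),
    Flavor("example.2c8g", 2, 8),
    Flavor("example.4c16g", 4, 16),
]

# An old instance with 2 vCPUs and 6 GB of RAM does not fit the 1c4g size.
print(pick_flavor(catalogue, vcpus=2, ram_gb=6))
```

If the function returns None, no flavor in the list is large enough and you will need to discuss your requirements with support.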

In the Networks tab, change the network to qh2-uom if you would like to keep the network behaviour you had on the Nectar cloud.

However, we recommend that you use the qh2-uom-internal network. On this network, your VM is only accessible from within the University network or over the Unimelb VPN.

This offers greater security than the default public IP option because your instance is not accessible from elsewhere by default.

For more information, please refer to the following article: Using UoM Internal Network.

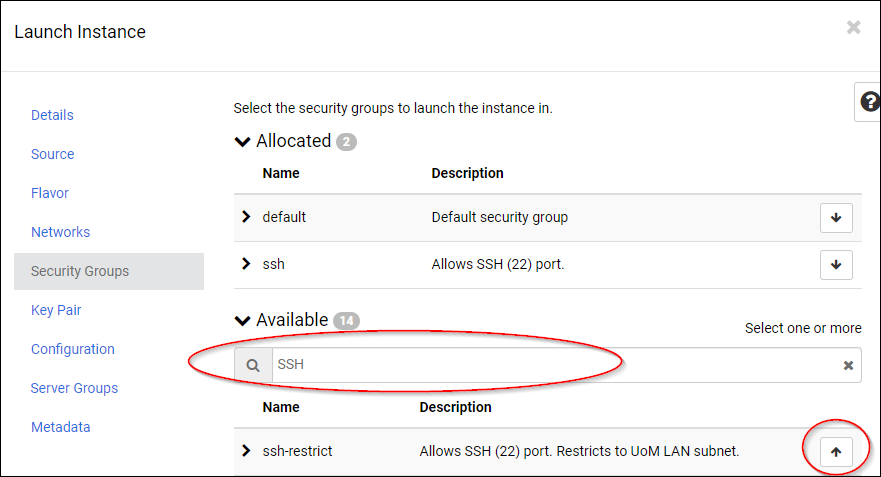

On the Security Groups tab, be sure to allocate the same security groups you noted earlier (HTTPS, SSH, and default in this example).

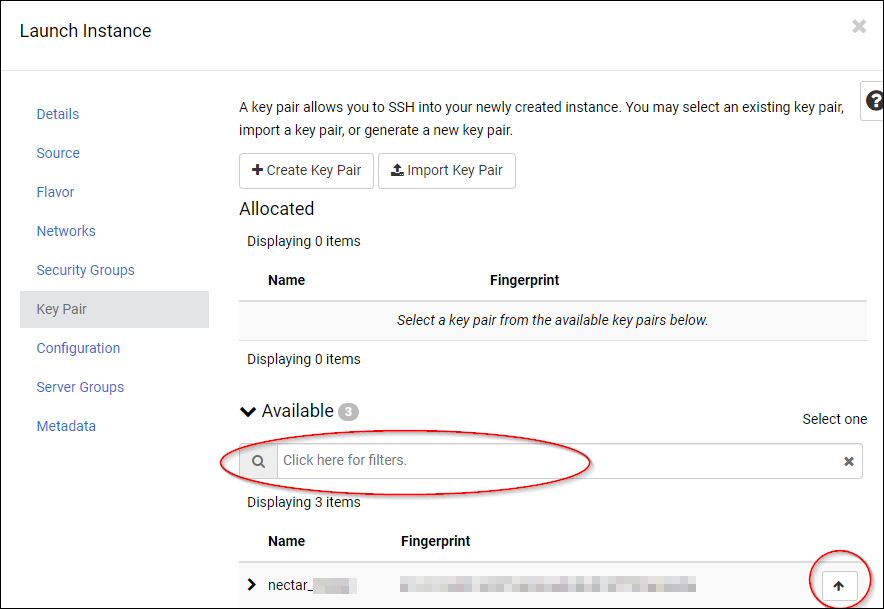

On the Key Pair tab, select the required key, or add a new key if you wish.

Click the Launch Instance button to launch. It might take some time, but if you go to the MRC Instances page, you should eventually see the new instance running in its new home.
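The choices made in the launch dialogue map onto a single command in the OpenStack command-line client. The sketch below assumes the client is installed and your MRC project credentials are loaded. The instance name, snapshot name, flavor name and key name are placeholders, and the security group names should be replaced with the ones you noted in Step 1. The helper only builds and prints the command.

```python
import shlex


def launch_command(name: str, snapshot: str, flavor: str, network: str,
                   security_groups: list[str], key_name: str) -> list[str]:
    """Build the CLI command that launches an instance from a snapshot."""
    command = [
        "openstack", "server", "create",
        "--image", snapshot,      # the snapshot created on the old instance
        "--flavor", flavor,       # an equivalent uom.general flavor
        "--network", network,     # qh2-uom or qh2-uom-internal
        "--key-name", key_name,
    ]
    # --security-group is repeated once per group.
    for group in security_groups:
        command += ["--security-group", group]
    # --wait blocks until the new instance is active.
    command += ["--wait", name]
    return command


# Placeholder values: substitute the settings you collected in Step 1.
print(shlex.join(launch_command(
    name="my-instance-mrc",
    snapshot="my-instance-pre-mrc",
    flavor="FLAVOR_NAME",
    network="qh2-uom-internal",
    security_groups=["default", "SSH", "HTTPS"],
    key_name="my-key",
)))
```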

You should now be able to SSH into the new instance using the new IP address which is displayed on the Instances tab, using the required key and username.

Once you have tested that the new instance is working as expected, please delete the old instance as directed. If you were not directed to delete the instance after migrating, please feel free to delete it to avoid tying up resources you no longer need. Do not delete the old instance before testing that the new instance works, as you may need to modify the old instance and/or re-snapshot it to resolve any problems.

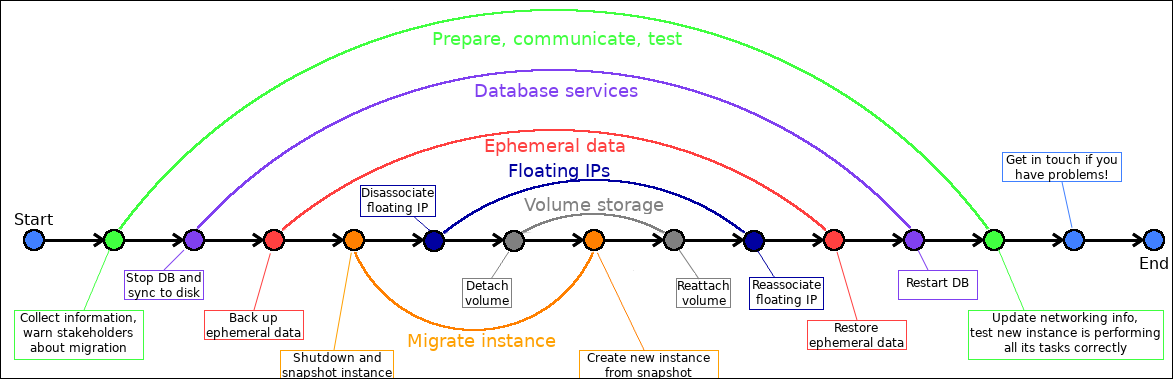

Flowchart for navigating non-trivial scenarios

In the flowchart, you can skip any circles that don't match your situation. Everyone will need to do the light blue, green and orange circles (respectively start/end, prepare/communicate/test, shutdown/snapshot/relaunch). Many people can skip purple, pink, dark blue and grey (database stop/start, ephemeral data backup/restore, disassociate/re-associate floating IP, detach/reattach volumes).

Detailed Information For Important Steps

1. Collecting Information

At minimum, repeat Step 1 from the Simplest Scenario. Other information that you should collect, especially if you know that you have a more complicated situation, includes:

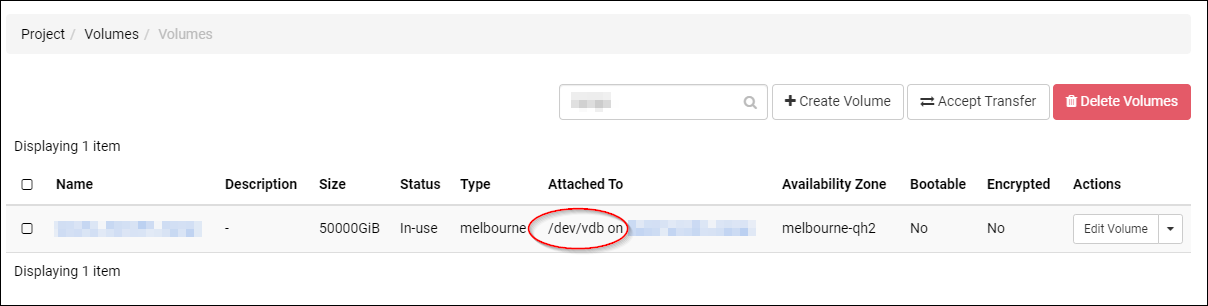

- What volumes are attached to the instance? (Look in, for example, Project → Volumes → Volumes on the dashboard. If your instance is not listed in the Attached To column of the volumes list, you can skip all instructions concerning volume management.) If volumes are attached, you should see the instance mount point (such as /dev/vdb) listed in the Attached To column of the volumes list. (You should also see the mount point on the instance itself, from the /etc/fstab file and the df -h command.)

- Is the ephemeral storage actively used? Does a backup plan already exist for managing it? When was the last backup?

- Who are the instance's stakeholders? This could be services running either on other instances or externally that depend on this instance. You should also review the login patterns of users. (If your instance is running Linux, the last command is useful for this.) Stakeholders should be notified about the expected outage before the migration, and the new IP address afterwards.

- Is there a domain name associated with the instance's IP address?

- Does the instance access external services that have firewall/whitelisting rules in place which will block the new IP address upon migration?

Using the Volumes filter area to identify volumes attached to an instance and their mount points.

Once you have all this information, you should have a good understanding of what is needed to complete the migration, and how everything fits together.
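When checking mount points on the instance itself, it can help to list what /etc/fstab declares. The following is a minimal sketch that extracts device and mount point pairs from fstab-style text. The sample content is made up for illustration, and on a real instance entries may refer to volumes by UUID or LABEL rather than by a /dev/vdb style path.

```python
def fstab_mounts(fstab_text: str) -> list[tuple[str, str]]:
    """Return (device, mount point) pairs from the contents of /etc/fstab."""
    mounts = []
    for line in fstab_text.splitlines():
        line = line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) >= 2:
            mounts.append((fields[0], fields[1]))
    return mounts


# Made-up sample; on the instance you would read /etc/fstab instead.
sample = """\
# <device>   <mount point>  <type>  <options>  <dump>  <pass>
LABEL=cloudimg-rootfs  /          ext4  defaults         0 1
/dev/vdb               /mnt/data  ext4  defaults,nofail  0 2
"""

for device, mount_point in fstab_mounts(sample):
    print(device, "->", mount_point)
```

Compare the result with the output of df -h, since a volume can be mounted by hand without having an fstab entry.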

2. Backing Up and Restoring Ephemeral Storage

Nectar has existing documentation that covers this topic:

3. Detaching and Attaching Volumes

If you are migrating your instance from melbourne-qh2 to MRC, there is no need to migrate the volume at all.

The volume is visible from both AZs because they reside within the same data centre.

If your instance is in an AZ other than melbourne, migrating volume storage is more complicated.

Although a snapshotting option exists for volumes as for instances, each volume snapshot is usually constrained to a single zone.

This means the solution that works for instances will not work for volumes.

If you really need to transfer the contents of a volume from one zone to another, you need to make sure you have a volume storage allocation in both zones, and even then your options are limited. (In particular, it is not usually possible to do it using the Nectar dashboard.)

You can try using the Cinder API client; some of the existing documentation will point you in the right direction:

Some locations even have a Cinder Backup option available in the dashboard.

Even when Cinder options exist, it is usually best to do the following:

- Create a new volume in MRC.

- Then use traditional syncing and backup tools like rsync or scp to transfer the data over. (This is covered in the above links to our Backup documentation.)
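As an illustration of the transfer step, the sketch below builds an rsync command that copies the contents of a mounted volume to the new instance over SSH. The paths, username and address are placeholders, and it assumes the new volume is already attached, formatted and mounted on the MRC instance. The helper only builds and prints the command.

```python
import shlex


def rsync_command(source_dir: str, user: str, host: str, dest_dir: str) -> list[str]:
    """Build an rsync command that copies a directory's contents to a remote host."""
    # A trailing slash on the source copies the contents, not the directory itself.
    source = source_dir.rstrip("/") + "/"
    # -a preserves permissions, ownership and timestamps; --progress shows progress.
    return ["rsync", "-a", "--progress", source, f"{user}@{host}:{dest_dir}"]


# Placeholder values: substitute your own mount points, username and address.
print(shlex.join(rsync_command("/mnt/data", "ubuntu", "NEW_INSTANCE_IP", "/mnt/data/")))
```

Run the command from the old instance while it is still available. It can be re-run safely, because rsync only transfers files that have changed.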

4. Volume Reassignment (Only from melbourne-qh2)

For the simple case of reassigning a volume to a new instance within the same data centre (despite being in a different AZ), a brief walk-through follows.

Some of the steps are identical to the tutorial in Section One of this guide, and are only covered at a high level here.

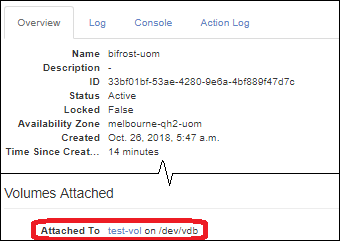

We consider the scenario where you have an instance bifrost-mel with a volume test-vol attached.

Due to a local re-organisation you have been asked to migrate it from melbourne-qh2 to melbourne-qh2-uom.

These AZs are both run out of the same data centre, so it will be simple to reassign the volume after migrating the instance it is attached to.

Shut down the instance bifrost-mel in the usual way.

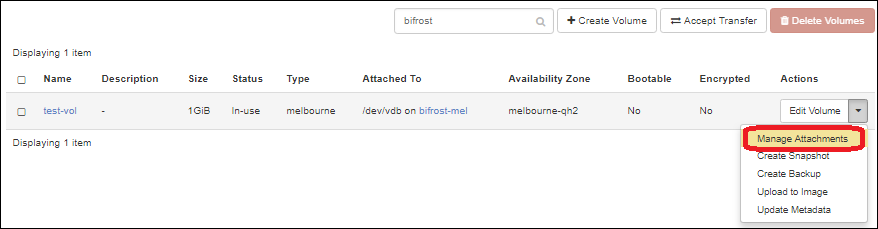

After bifrost-mel has finished shutting down, detach test-vol from bifrost-mel:

- Go to Project → Volumes → Volumes

- Identify the row containing

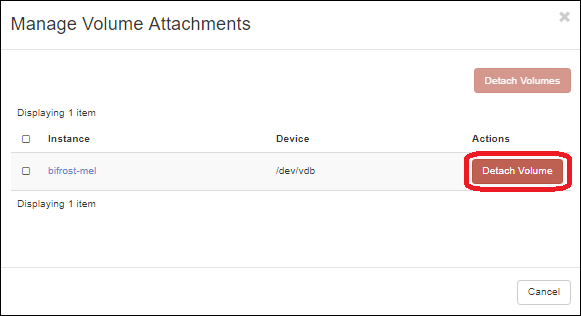

test-voland select Manage Attachments from its Actions menu on the right. - In the dialogue that pops up click the Detach Volume button next to

bifrost-mel. - Confirm the detachment when prompted. You may need to refresh the browser window to confirm the volume is no longer attached.

Next, create an instance snapshot of bifrost-mel in the same way as discussed earlier.

Then go to Project → Compute → Images and launch a new instance from the bifrost-mel snapshot.

You may reuse the same name if you wish, but we are using bifrost-uom to avoid any confusion.

When creating the new instance, remember to set the Security Groups, and select melbourne-qh2-uom in the Availability Zone list.

If you use the same name here as the original instance, you should make a note of the new instance's ID.

You will need this in the next step to tell the difference between the two instances.

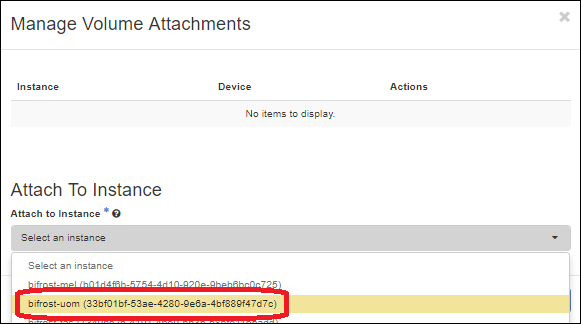

Attach test-vol to the new instance.

- Go to Project → Volumes → Volumes.

- Identify the row containing test-vol and select Manage Attachments from its Actions menu on the right.

- In the drop-down list of possible instances, select the new instance. (Now you can see why you needed the instance ID!)

- Click the Attach Volume button.

Go to Project → Compute → Instances

- If the new instance isn't already started, you may start it now.

- Test that the new instance is working correctly and that you can use the attached volume storage in the same manner as you did for the old instance.

- Once you are satisfied that everything is okay, the old instance can be deleted.
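The detach and attach steps in this walk-through also have command-line equivalents. The sketch below assumes the OpenStack command-line client is installed and your project credentials are loaded. It uses the example names from this section, with NEW_INSTANCE_ID standing in for the ID you noted after launching the new instance. The helper only builds and prints the commands.

```python
import shlex


def reassign_volume_commands(old_server: str, new_server: str,
                             volume: str) -> list[list[str]]:
    """Return the CLI commands that move a volume from one instance to another."""
    return [
        # Detach from the old instance (after it has shut down).
        ["openstack", "server", "remove", "volume", old_server, volume],
        # Attach to the new instance (after it has been launched from the snapshot).
        ["openstack", "server", "add", "volume", new_server, volume],
    ]


# Using the ID for the new instance avoids ambiguity if both instances share a name.
for command in reassign_volume_commands("bifrost-mel", "NEW_INSTANCE_ID", "test-vol"):
    print(shlex.join(command))
```

The snapshot and launch steps happen between the two commands, so do not run them back to back.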

Getting Help

If you're not dealing with the simplest scenario, migrations can be quite complicated as you try to juggle interactions between your compute, storage, and networking. If you require support, feel free to raise a ticket: